In a significant move for the AI industry, OpenAI has released its first open-weight language models since GPT-2: gpt-oss-120b and gpt-oss-20b. But what does that mean for your business?

In short, it means you can now run powerful, OpenAI-grade AI on your own hardware. This unlocks new opportunities for companies that prioritise data privacy, deep customisation, and predictable costs. Instead of sending your data to a third-party API, you can keep it all in-house.

Let’s break down why this is a major development and how it could impact your business strategy.

Why This Is a Big Deal for Your Business

Until now, using OpenAI’s most powerful models meant relying on their cloud-based API. The new GPT-OSS models change that, offering several key advantages:

- Full Data Control & Privacy: By self-hosting the AI, your proprietary data never has to leave your secure environment. This is critical for companies in finance, healthcare, law, or any industry with strict data compliance and confidentiality needs.

- Deeper Customisation & Brand Alignment: An open model can be fine-tuned on your company’s specific data, documents, and past communications. Imagine an AI assistant that not only understands your internal jargon but also drafts content perfectly in your brand’s unique voice.

- Predictable Costs & No Vendor Lock-In: While API usage fees can fluctuate with demand, running a self-hosted model moves the cost to your own infrastructure. For high-volume use cases, this can lead to a more predictable and potentially lower total cost of ownership.

- Powerful, Custom-Built Automations: These models are designed for “agent workflows”—driving actions, not just generating text. Thanks to features like Structured Outputs, they can deliver clean data ready to be used instantly by your other business systems, making for incredibly reliable automations.

- Tunable Performance to Match the Task: You can adjust the model’s “reasoning effort” from low to high. This gives you granular control, allowing you to prioritise speed and low cost for simple tasks, while reserving maximum power for complex analytical work. It’s about applying the right power for the right job.

- Enterprise-Grade Credibility & Security: This isn’t just an experiment. Major enterprises like Snowflake and Orange were early partners, proving the models’ readiness for serious business applications. Furthermore, OpenAI has been transparent about its proactive safety measures, including stress-testing the models against malicious modifications.
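To make the Structured Outputs point concrete, here is a minimal Python sketch of how a downstream system might check a model reply before acting on it. It assumes the model has been asked to reply with JSON only. The field names (`customer`, `intent`, `priority`) and the `parse_ticket` helper are hypothetical, chosen for illustration, and are not part of any OpenAI API.

```python
import json

# Hypothetical schema for a support-ticket automation: field name -> expected type.
REQUIRED_FIELDS = {"customer": str, "intent": str, "priority": int}


def parse_ticket(model_reply: str) -> dict:
    """Parse a JSON reply and check that it has the fields we expect.

    Raises ValueError if the reply is not valid JSON, or if a field is
    missing or has the wrong type, so bad output never reaches other systems.
    """
    try:
        data = json.loads(model_reply)
    except json.JSONDecodeError as exc:
        raise ValueError(f"reply is not valid JSON: {exc}") from exc
    if not isinstance(data, dict):
        raise ValueError("reply must be a JSON object")
    for name, expected_type in REQUIRED_FIELDS.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        if not isinstance(data[name], expected_type):
            raise ValueError(f"field {name} should be {expected_type.__name__}")
    return data


# Example reply written by hand for illustration (not real model output).
reply = '{"customer": "Acme Ltd", "intent": "refund", "priority": 2}'
ticket = parse_ticket(reply)
print(ticket["intent"])
```

A check like this sits between the model and your CRM or ticketing system, so a malformed reply is rejected rather than passed along.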

Practical Use Cases You Can Implement Now

This isn’t just a theoretical upgrade. Here are some immediate, high-value applications for a self-hosted AI:

- A Hyper-Intelligent Internal Knowledge Base: Feed the model your company handbooks, project histories, and process documents. Your team gets a private, expert assistant that can answer complex questions instantly.

- Secure Customer Support & Sales Assistants: The models have proven their strength in complex, specialised fields, even outperforming some proprietary systems in benchmarks related to health conversations. This demonstrates a robustness that can be trusted for high-stakes B2B or B2C interactions.

- Private Content & Marketing Drafts: Generate marketing copy, reports, and internal communications securely. Fine-tune the model to ensure every output is perfectly aligned with your brand voice and messaging strategy.

- Automated Data Analysis & Reporting: With the ability to process very large documents (up to a 128k context length), the AI can analyse lengthy reports or datasets in a single pass before connecting to your internal databases to generate insights.

Can You Run This Yourself?

Yes, with caveats. The smaller gpt-oss-20b model is designed to run on high-end consumer hardware, but deploying either model for reliable business use requires technical expertise.

- The Small Model (gpt-oss-20b): This is proving to be really powerful. It runs well on MacBook Pros with M2, M3, and M4 chips, and is potentially best for prototyping, internal testing, or light-duty AI tasks.

- The Large Model (gpt-oss-120b): Requires server-class hardware. This is the model you’d want for production-grade, heavy-lifting business applications.

Setting up, optimising, and securing these models is a significant technical undertaking. Performance depends heavily on the hardware, software stack, and the specific workload.

Remember you will need at least 16GB of RAM, but ideally a lot more. The models run best and fastest when they can be held entirely in RAM or VRAM. On Apple Silicon, the unified memory architecture gives the GPU high-bandwidth access to that memory, and with these models memory bandwidth matters as well as the amount of RAM.
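As a rough guide to why memory matters, the short Python sketch below estimates how much memory the weights alone would occupy at different precisions. It is a back-of-the-envelope calculation under stated assumptions: the parameter counts are the nominal figures implied by the model names rather than exact values, the bit widths are illustrative, and the estimate ignores the extra memory needed for context and runtime overhead.

```python
def weight_memory_gb(parameters: float, bits_per_weight: float) -> float:
    """Approximate memory needed just to hold the weights, in GB (10^9 bytes)."""
    return parameters * bits_per_weight / 8 / 1e9


# Nominal parameter counts taken from the model names (an assumption, not exact figures).
models = {"gpt-oss-20b": 20e9, "gpt-oss-120b": 120e9}

for name, params in models.items():
    for bits in (16, 4):  # illustrative precisions: 16-bit and 4-bit weights
        print(f"{name} at {bits}-bit: ~{weight_memory_gb(params, bits):.0f} GB")
```

On these assumptions, only a heavily quantised version of the smaller model fits comfortably on a 16GB machine, which is why more memory is better.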

How do you set it up?

Ready to set this up or test it on your machine? You can go to the GPT OSS Playground here. You can try each model for yourself and then press the download button. From there you can choose to set it up via Hugging Face, Ollama, or LM Studio, depending on which option you prefer.

We recommend trialling Ollama or LM Studio if you are not tech-savvy. If your machine does not have enough RAM or memory bandwidth, you will notice the model running very slowly, in which case you may want to quantise the model (store its weights at lower precision so it needs less memory).

With Ollama or LM Studio everything is done for you (mostly!), ready for you to take your first-ever self-hosted LLM for a test drive.
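Once a model is running in Ollama, other software on the same machine can talk to it over a local HTTP API. The Python sketch below shows one way that might look, using only the standard library. It assumes Ollama is running on its default local port, that the model was downloaded under the tag `gpt-oss:20b`, and that the reasoning level can be set through the system prompt. Check these against your own setup. The helper names `build_chat_request` and `ask` are our own, not part of Ollama.

```python
import json
import urllib.request

# Assumptions: Ollama is running locally on its default port, and the model
# has been downloaded under the tag "gpt-oss:20b".
OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"
MODEL_TAG = "gpt-oss:20b"


def build_chat_request(prompt: str, reasoning: str = "low") -> urllib.request.Request:
    """Build (but do not send) a chat request for the local Ollama server."""
    payload = {
        "model": MODEL_TAG,
        "stream": False,  # ask for one complete JSON reply instead of a stream
        "messages": [
            # Assumption: the reasoning level is set via the system prompt.
            {"role": "system", "content": f"Reasoning: {reasoning}"},
            {"role": "user", "content": prompt},
        ],
    }
    return urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str, reasoning: str = "low") -> str:
    """Send the prompt to the local model and return its reply text."""
    with urllib.request.urlopen(build_chat_request(prompt, reasoning)) as response:
        body = json.load(response)
    return body["message"]["content"]
```

With Ollama running, calling `ask("Summarise our returns policy in two sentences.")` would return the model's reply as text, and nothing leaves your machine.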

How does it compare?

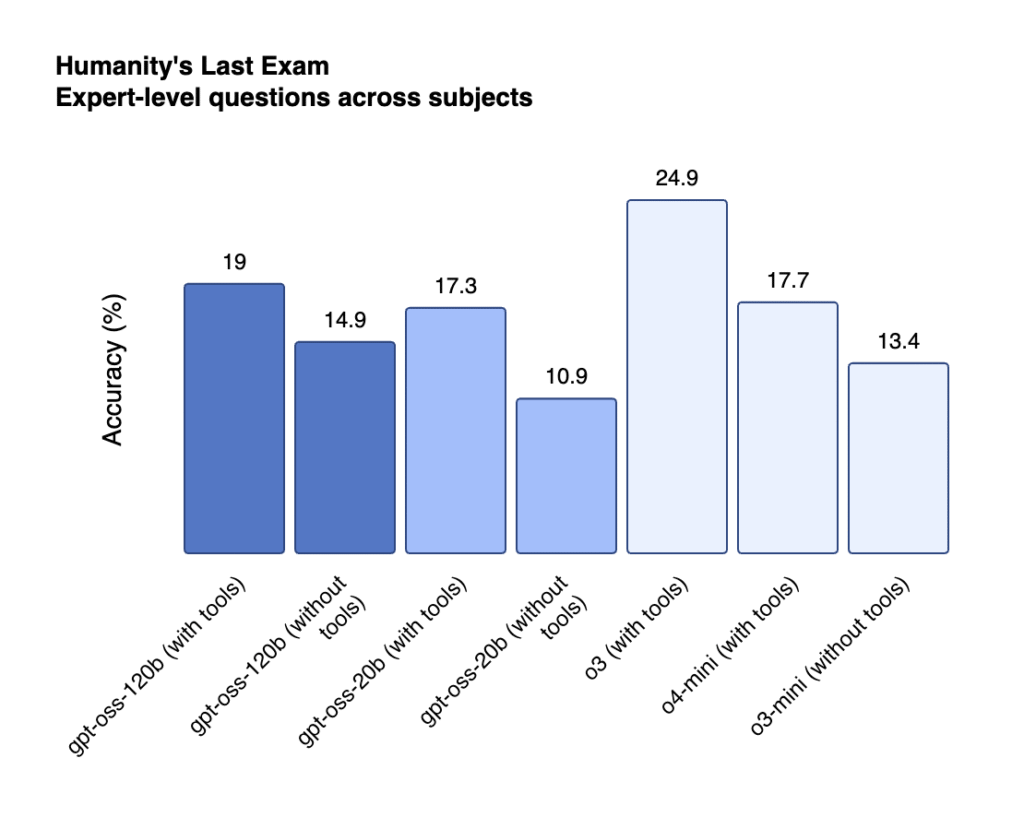

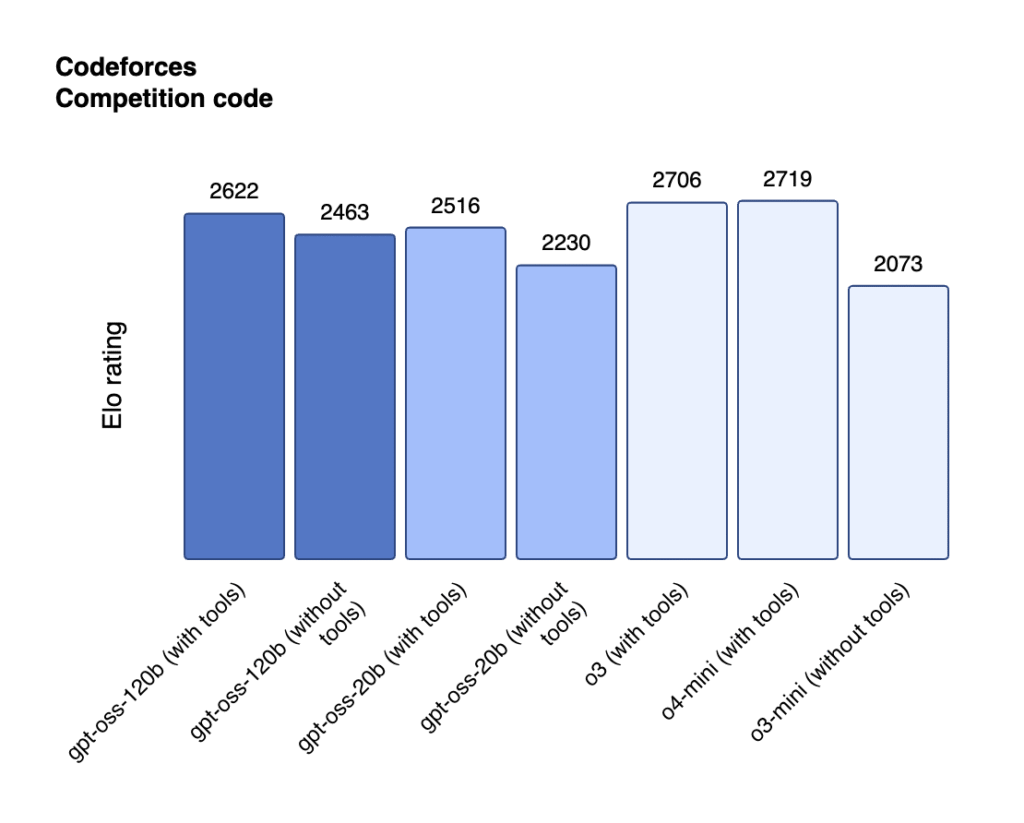

The charts above, from OpenAI’s official post, compare the two models with OpenAI’s currently available models. Tool use, which we know by now to be essential for agentic workflows, is impressive for OpenAI’s first open-weight models when compared with what its current models offer.

How Design for Online Can Help

The release of GPT-OSS models creates a powerful new strategic option for businesses ready to take the next step in their AI journey. But turning potential into performance requires a clear plan.

At Design for Online, we specialise in:

- AI Strategy Consulting: We’ll help you determine if a self-hosted model is the right fit for your goals and map out a clear implementation roadmap.

- Custom AI Integration: We can build and deploy private AI solutions that integrate seamlessly with your existing tools and workflows.

- Workflow Automation: Let us design and implement the AI-powered agents that will automate your marketing, sales, and operational processes, giving you a decisive competitive edge.

Ready to explore how a private, custom-trained AI could transform your business? Contact us for a consultation today.